UPDATE 20180413

Assembly complete. See end of post for data locations.

UPDATE 20180410

Received a status update email:

SLURM Job_id=156637 Name=20180405_sparse_assembler_kmer101_geo Ended, Run time 4-20:17:08, CANCELLED, ExitCode 0

After talking to Steven, it turns out Mox was taken offline for maintenance, which killed all jobs (and access). Ugh.

Will restart tonight once Mox is back online.

OK, here we go! Initiatied an assembly run using SparseAssembler on our Mox HPC node on all of our geoduck genomic sequencing data:

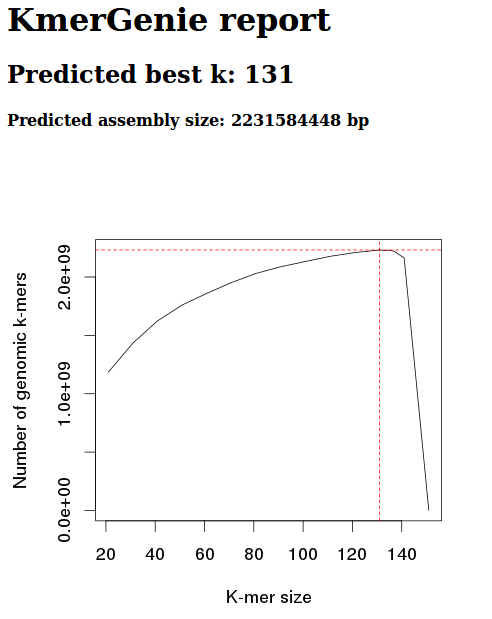

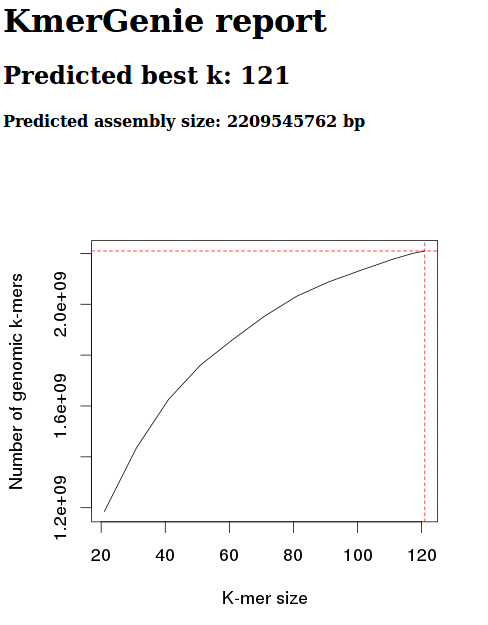

Kmer size set to 101.

This is 118 files of sequencing data!! Fingers crossed…

Slurm script: 20180405_sparse_assembler_kmer101_geoduck_slurm.sh

#!/bin/bash

## Job Name

#SBATCH --job-name=20180405_sparse_assembler_kmer101_geo

## Allocation Definition

#SBATCH --account=srlab

#SBATCH --partition=srlab

## Resources

## Nodes (We only get 1, so this is fixed)

#SBATCH --nodes=1

## Walltime (days-hours:minutes:seconds format)

#SBATCH --time=30-00:00:00

## Memory per node

#SBATCH --mem=500G

##turn on e-mail notification

#SBATCH --mail-type=ALL

#SBATCH --mail-user=samwhite@uw.edu

## Specify the working directory for this job

#SBATCH --workdir=/gscratch/scrubbed/samwhite/20180405_sparseassembler_kmer101_geoduck

/gscratch/srlab/programs/SparseAssembler/SparseAssembler \

LD 0 \

NodeCovTh 1 \

EdgeCovTh 0 \

k 101 \

g 15 \

PathCovTh 100 \

GS 2200000000 \

i1 /gscratch/scrubbed/samwhite/20180129_trimgalore_geoduck_novaseq/AD002_S9_L001_R1_001_val_1_val_1.fastq \

i2 /gscratch/scrubbed/samwhite/20180129_trimgalore_geoduck_novaseq/AD002_S9_L001_R2_001_val_2_val_2.fastq \

i1 /gscratch/scrubbed/samwhite/20180129_trimgalore_geoduck_novaseq/AD002_S9_L002_R1_001_val_1_val_1.fastq \

i2 /gscratch/scrubbed/samwhite/20180129_trimgalore_geoduck_novaseq/AD002_S9_L002_R2_001_val_2_val_2.fastq \

i1 /gscratch/scrubbed/samwhite/20180129_trimgalore_geoduck_novaseq/NR005_S4_L001_R1_001_val_1_val_1.fastq \

i2 /gscratch/scrubbed/samwhite/20180129_trimgalore_geoduck_novaseq/NR005_S4_L001_R2_001_val_2_val_2.fastq \

i1 /gscratch/scrubbed/samwhite/20180129_trimgalore_geoduck_novaseq/NR005_S4_L002_R1_001_val_1_val_1.fastq \

i2 /gscratch/scrubbed/samwhite/20180129_trimgalore_geoduck_novaseq/NR005_S4_L002_R2_001_val_2_val_2.fastq \

i1 /gscratch/scrubbed/samwhite/20180129_trimgalore_geoduck_novaseq/NR006_S3_L001_R1_001_val_1_val_1.fastq \

i2 /gscratch/scrubbed/samwhite/20180129_trimgalore_geoduck_novaseq/NR006_S3_L001_R2_001_val_2_val_2.fastq \

i1 /gscratch/scrubbed/samwhite/20180129_trimgalore_geoduck_novaseq/NR006_S3_L002_R1_001_val_1_val_1.fastq \

i2 /gscratch/scrubbed/samwhite/20180129_trimgalore_geoduck_novaseq/NR006_S3_L002_R2_001_val_2_val_2.fastq \

i1 /gscratch/scrubbed/samwhite/20180129_trimgalore_geoduck_novaseq/NR012_S1_L001_R1_001_val_1_val_1.fastq \

i2 /gscratch/scrubbed/samwhite/20180129_trimgalore_geoduck_novaseq/NR012_S1_L001_R2_001_val_2_val_2.fastq \

i1 /gscratch/scrubbed/samwhite/20180129_trimgalore_geoduck_novaseq/NR012_S1_L002_R1_001_val_1_val_1.fastq \

i2 /gscratch/scrubbed/samwhite/20180129_trimgalore_geoduck_novaseq/NR012_S1_L002_R2_001_val_2_val_2.fastq \

i1 /gscratch/scrubbed/samwhite/20180129_trimgalore_geoduck_novaseq/NR013_AD013_S2_L001_R1_001_val_1_val_1.fastq \

i2 /gscratch/scrubbed/samwhite/20180129_trimgalore_geoduck_novaseq/NR013_AD013_S2_L001_R2_001_val_2_val_2.fastq \

i1 /gscratch/scrubbed/samwhite/20180129_trimgalore_geoduck_novaseq/NR013_AD013_S2_L002_R1_001_val_1_val_1.fastq \

i2 /gscratch/scrubbed/samwhite/20180129_trimgalore_geoduck_novaseq/NR013_AD013_S2_L002_R2_001_val_2_val_2.fastq \

i1 /gscratch/scrubbed/samwhite/20180129_trimgalore_geoduck_novaseq/NR014_AD014_S5_L001_R1_001_val_1_val_1.fastq \

i2 /gscratch/scrubbed/samwhite/20180129_trimgalore_geoduck_novaseq/NR014_AD014_S5_L001_R2_001_val_2_val_2.fastq \

i1 /gscratch/scrubbed/samwhite/20180129_trimgalore_geoduck_novaseq/NR014_AD014_S5_L002_R1_001_val_1_val_1.fastq \

i2 /gscratch/scrubbed/samwhite/20180129_trimgalore_geoduck_novaseq/NR014_AD014_S5_L002_R2_001_val_2_val_2.fastq \

i1 /gscratch/scrubbed/samwhite/20180129_trimgalore_geoduck_novaseq/NR015_AD015_S6_L001_R1_001_val_1_val_1.fastq \

i2 /gscratch/scrubbed/samwhite/20180129_trimgalore_geoduck_novaseq/NR015_AD015_S6_L001_R2_001_val_2_val_2.fastq \

i1 /gscratch/scrubbed/samwhite/20180129_trimgalore_geoduck_novaseq/NR015_AD015_S6_L002_R1_001_val_1_val_1.fastq \

i2 /gscratch/scrubbed/samwhite/20180129_trimgalore_geoduck_novaseq/NR015_AD015_S6_L002_R2_001_val_2_val_2.fastq \

i1 /gscratch/scrubbed/samwhite/20180129_trimgalore_geoduck_novaseq/NR019_S7_L001_R1_001_val_1_val_1.fastq \

i2 /gscratch/scrubbed/samwhite/20180129_trimgalore_geoduck_novaseq/NR019_S7_L001_R2_001_val_2_val_2.fastq \

i1 /gscratch/scrubbed/samwhite/20180129_trimgalore_geoduck_novaseq/NR019_S7_L002_R1_001_val_1_val_1.fastq \

i2 /gscratch/scrubbed/samwhite/20180129_trimgalore_geoduck_novaseq/NR019_S7_L002_R2_001_val_2_val_2.fastq \

i1 /gscratch/scrubbed/samwhite/20180129_trimgalore_geoduck_novaseq/NR021_S8_L001_R1_001_val_1_val_1.fastq \

i2 /gscratch/scrubbed/samwhite/20180129_trimgalore_geoduck_novaseq/NR021_S8_L001_R2_001_val_2_val_2.fastq \

i1 /gscratch/scrubbed/samwhite/20180129_trimgalore_geoduck_novaseq/NR021_S8_L002_R1_001_val_1_val_1.fastq \

i2 /gscratch/scrubbed/samwhite/20180129_trimgalore_geoduck_novaseq/NR021_S8_L002_R2_001_val_2_val_2.fastq \

i1 /gscratch/scrubbed/samwhite/bgi_geoduck/151114_I191_FCH3Y35BCXX_L1_wHAIPI023989-79_1.fastq \

i2 /gscratch/scrubbed/samwhite/bgi_geoduck/151114_I191_FCH3Y35BCXX_L1_wHAIPI023989-79_2.fastq \

i1 /gscratch/scrubbed/samwhite/bgi_geoduck/151114_I191_FCH3Y35BCXX_L2_wHAMPI023988-81_1.fastq \

i2 /gscratch/scrubbed/samwhite/bgi_geoduck/151114_I191_FCH3Y35BCXX_L2_wHAMPI023988-81_2.fastq \

i1 /gscratch/scrubbed/samwhite/bgi_geoduck/151122_I136_FCH3L2FBBXX_L7_wHAXPI023990-97_1.fastq \

i2 /gscratch/scrubbed/samwhite/bgi_geoduck/151122_I136_FCH3L2FBBXX_L7_wHAXPI023990-97_2.fastq \

i1 /gscratch/scrubbed/samwhite/bgi_geoduck/160103_I137_FCH3V5YBBXX_L3_WHPANwalDDAADWAAPEI-101_1.fastq \

i2 /gscratch/scrubbed/samwhite/bgi_geoduck/160103_I137_FCH3V5YBBXX_L3_WHPANwalDDAADWAAPEI-101_2.fastq \

i1 /gscratch/scrubbed/samwhite/bgi_geoduck/160103_I137_FCH3V5YBBXX_L4_WHPANwalDDAADWAAPEI-101_1.fastq \

i2 /gscratch/scrubbed/samwhite/bgi_geoduck/160103_I137_FCH3V5YBBXX_L4_WHPANwalDDAADWAAPEI-101_2.fastq \

i1 /gscratch/scrubbed/samwhite/bgi_geoduck/160103_I137_FCH3V5YBBXX_L5_WHPANwalDDABDLAAPEI-100_1.fastq \

i2 /gscratch/scrubbed/samwhite/bgi_geoduck/160103_I137_FCH3V5YBBXX_L5_WHPANwalDDABDLAAPEI-100_2.fastq \

i1 /gscratch/scrubbed/samwhite/bgi_geoduck/160103_I137_FCH3V5YBBXX_L5_WHPANwalDDACDTAAPEI-102_1.fastq \

i2 /gscratch/scrubbed/samwhite/bgi_geoduck/160103_I137_FCH3V5YBBXX_L5_WHPANwalDDACDTAAPEI-102_2.fastq \

i1 /gscratch/scrubbed/samwhite/bgi_geoduck/160103_I137_FCH3V5YBBXX_L6_WHPANwalDDABDLAAPEI-100_1.fastq \

i2 /gscratch/scrubbed/samwhite/bgi_geoduck/160103_I137_FCH3V5YBBXX_L6_WHPANwalDDABDLAAPEI-100_2.fastq \

i1 /gscratch/scrubbed/samwhite/bgi_geoduck/160103_I137_FCH3V5YBBXX_L6_WHPANwalDDACDTAAPEI-102_1.fastq \

i2 /gscratch/scrubbed/samwhite/bgi_geoduck/160103_I137_FCH3V5YBBXX_L6_WHPANwalDDACDTAAPEI-102_2.fastq \

i1 /gscratch/scrubbed/samwhite/illumina_geoduck_hiseq/20180328_trim_galore_illumina_hiseq_geoduck/Geoduck-NMP-gDNA-1_S1_L001_R1_001_val_1.fastq \

i2 /gscratch/scrubbed/samwhite/illumina_geoduck_hiseq/20180328_trim_galore_illumina_hiseq_geoduck/Geoduck-NMP-gDNA-1_S1_L001_R2_001_val_2.fastq \

i1 /gscratch/scrubbed/samwhite/illumina_geoduck_hiseq/20180328_trim_galore_illumina_hiseq_geoduck/Geoduck-NMP-gDNA-2_S5_L002_R1_001_val_1.fastq \

i2 /gscratch/scrubbed/samwhite/illumina_geoduck_hiseq/20180328_trim_galore_illumina_hiseq_geoduck/Geoduck-NMP-gDNA-2_S5_L002_R2_001_val_2.fastq \

i1 /gscratch/scrubbed/samwhite/illumina_geoduck_hiseq/20180328_trim_galore_illumina_hiseq_geoduck/Geoduck-NMP-gDNA-2to4kb-1_S2_L001_R1_001_val_1.fastq \

i2 /gscratch/scrubbed/samwhite/illumina_geoduck_hiseq/20180328_trim_galore_illumina_hiseq_geoduck/Geoduck-NMP-gDNA-2to4kb-1_S2_L001_R2_001_val_2.fastq \

i1 /gscratch/scrubbed/samwhite/illumina_geoduck_hiseq/20180328_trim_galore_illumina_hiseq_geoduck/Geoduck-NMP-gDNA-2to4kb-2_S6_L002_R1_001_val_1.fastq \

i2 /gscratch/scrubbed/samwhite/illumina_geoduck_hiseq/20180328_trim_galore_illumina_hiseq_geoduck/Geoduck-NMP-gDNA-2to4kb-2_S6_L002_R2_001_val_2.fastq \

i1 /gscratch/scrubbed/samwhite/illumina_geoduck_hiseq/20180328_trim_galore_illumina_hiseq_geoduck/Geoduck-NMP-gDNA-2to4kb-3_S10_L003_R1_001_val_1.fastq \

i2 /gscratch/scrubbed/samwhite/illumina_geoduck_hiseq/20180328_trim_galore_illumina_hiseq_geoduck/Geoduck-NMP-gDNA-2to4kb-3_S10_L003_R2_001_val_2.fastq \

i1 /gscratch/scrubbed/samwhite/illumina_geoduck_hiseq/20180328_trim_galore_illumina_hiseq_geoduck/Geoduck-NMP-gDNA-2to4kb-4_S14_L004_R1_001_val_1.fastq \

i2 /gscratch/scrubbed/samwhite/illumina_geoduck_hiseq/20180328_trim_galore_illumina_hiseq_geoduck/Geoduck-NMP-gDNA-2to4kb-4_S14_L004_R2_001_val_2.fastq \

i1 /gscratch/scrubbed/samwhite/illumina_geoduck_hiseq/20180328_trim_galore_illumina_hiseq_geoduck/Geoduck-NMP-gDNA-2to4kb-5_S18_L005_R1_001_val_1.fastq \

i2 /gscratch/scrubbed/samwhite/illumina_geoduck_hiseq/20180328_trim_galore_illumina_hiseq_geoduck/Geoduck-NMP-gDNA-2to4kb-5_S18_L005_R2_001_val_2.fastq \

i1 /gscratch/scrubbed/samwhite/illumina_geoduck_hiseq/20180328_trim_galore_illumina_hiseq_geoduck/Geoduck-NMP-gDNA-2to4kb-6_S22_L006_R1_001_val_1.fastq \

i2 /gscratch/scrubbed/samwhite/illumina_geoduck_hiseq/20180328_trim_galore_illumina_hiseq_geoduck/Geoduck-NMP-gDNA-2to4kb-6_S22_L006_R2_001_val_2.fastq \

i1 /gscratch/scrubbed/samwhite/illumina_geoduck_hiseq/20180328_trim_galore_illumina_hiseq_geoduck/Geoduck-NMP-gDNA-2to4kb-7_S26_L007_R1_001_val_1.fastq \

i2 /gscratch/scrubbed/samwhite/illumina_geoduck_hiseq/20180328_trim_galore_illumina_hiseq_geoduck/Geoduck-NMP-gDNA-2to4kb-7_S26_L007_R2_001_val_2.fastq \

i1 /gscratch/scrubbed/samwhite/illumina_geoduck_hiseq/20180328_trim_galore_illumina_hiseq_geoduck/Geoduck-NMP-gDNA-2to4kb-8_S30_L008_R1_001_val_1.fastq \

i2 /gscratch/scrubbed/samwhite/illumina_geoduck_hiseq/20180328_trim_galore_illumina_hiseq_geoduck/Geoduck-NMP-gDNA-2to4kb-8_S30_L008_R2_001_val_2.fastq \

i1 /gscratch/scrubbed/samwhite/illumina_geoduck_hiseq/20180328_trim_galore_illumina_hiseq_geoduck/Geoduck-NMP-gDNA-3_S9_L003_R1_001_val_1.fastq \

i2 /gscratch/scrubbed/samwhite/illumina_geoduck_hiseq/20180328_trim_galore_illumina_hiseq_geoduck/Geoduck-NMP-gDNA-3_S9_L003_R2_001_val_2.fastq \

i1 /gscratch/scrubbed/samwhite/illumina_geoduck_hiseq/20180328_trim_galore_illumina_hiseq_geoduck/Geoduck-NMP-gDNA-4_S13_L004_R1_001_val_1.fastq \

i2 /gscratch/scrubbed/samwhite/illumina_geoduck_hiseq/20180328_trim_galore_illumina_hiseq_geoduck/Geoduck-NMP-gDNA-4_S13_L004_R2_001_val_2.fastq \

i1 /gscratch/scrubbed/samwhite/illumina_geoduck_hiseq/20180328_trim_galore_illumina_hiseq_geoduck/Geoduck-NMP-gDNA-5_S17_L005_R1_001_val_1.fastq \

i2 /gscratch/scrubbed/samwhite/illumina_geoduck_hiseq/20180328_trim_galore_illumina_hiseq_geoduck/Geoduck-NMP-gDNA-5_S17_L005_R2_001_val_2.fastq \

i1 /gscratch/scrubbed/samwhite/illumina_geoduck_hiseq/20180328_trim_galore_illumina_hiseq_geoduck/Geoduck-NMP-gDNA-5to7kb-1_S3_L001_R1_001_val_1.fastq \

i2 /gscratch/scrubbed/samwhite/illumina_geoduck_hiseq/20180328_trim_galore_illumina_hiseq_geoduck/Geoduck-NMP-gDNA-5to7kb-1_S3_L001_R2_001_val_2.fastq \

i1 /gscratch/scrubbed/samwhite/illumina_geoduck_hiseq/20180328_trim_galore_illumina_hiseq_geoduck/Geoduck-NMP-gDNA-5to7kb-2_S7_L002_R1_001_val_1.fastq \

i2 /gscratch/scrubbed/samwhite/illumina_geoduck_hiseq/20180328_trim_galore_illumina_hiseq_geoduck/Geoduck-NMP-gDNA-5to7kb-2_S7_L002_R2_001_val_2.fastq \

i1 /gscratch/scrubbed/samwhite/illumina_geoduck_hiseq/20180328_trim_galore_illumina_hiseq_geoduck/Geoduck-NMP-gDNA-5to7kb-3_S11_L003_R1_001_val_1.fastq \

i2 /gscratch/scrubbed/samwhite/illumina_geoduck_hiseq/20180328_trim_galore_illumina_hiseq_geoduck/Geoduck-NMP-gDNA-5to7kb-3_S11_L003_R2_001_val_2.fastq \

i1 /gscratch/scrubbed/samwhite/illumina_geoduck_hiseq/20180328_trim_galore_illumina_hiseq_geoduck/Geoduck-NMP-gDNA-5to7kb-4_S15_L004_R1_001_val_1.fastq \

i2 /gscratch/scrubbed/samwhite/illumina_geoduck_hiseq/20180328_trim_galore_illumina_hiseq_geoduck/Geoduck-NMP-gDNA-5to7kb-4_S15_L004_R2_001_val_2.fastq \

i1 /gscratch/scrubbed/samwhite/illumina_geoduck_hiseq/20180328_trim_galore_illumina_hiseq_geoduck/Geoduck-NMP-gDNA-5to7kb-5_S19_L005_R1_001_val_1.fastq \

i2 /gscratch/scrubbed/samwhite/illumina_geoduck_hiseq/20180328_trim_galore_illumina_hiseq_geoduck/Geoduck-NMP-gDNA-5to7kb-5_S19_L005_R2_001_val_2.fastq \

i1 /gscratch/scrubbed/samwhite/illumina_geoduck_hiseq/20180328_trim_galore_illumina_hiseq_geoduck/Geoduck-NMP-gDNA-5to7kb-6_S23_L006_R1_001_val_1.fastq \

i2 /gscratch/scrubbed/samwhite/illumina_geoduck_hiseq/20180328_trim_galore_illumina_hiseq_geoduck/Geoduck-NMP-gDNA-5to7kb-6_S23_L006_R2_001_val_2.fastq \

i1 /gscratch/scrubbed/samwhite/illumina_geoduck_hiseq/20180328_trim_galore_illumina_hiseq_geoduck/Geoduck-NMP-gDNA-5to7kb-7_S27_L007_R1_001_val_1.fastq \

i2 /gscratch/scrubbed/samwhite/illumina_geoduck_hiseq/20180328_trim_galore_illumina_hiseq_geoduck/Geoduck-NMP-gDNA-5to7kb-7_S27_L007_R2_001_val_2.fastq \

i1 /gscratch/scrubbed/samwhite/illumina_geoduck_hiseq/20180328_trim_galore_illumina_hiseq_geoduck/Geoduck-NMP-gDNA-5to7kb-8_S31_L008_R1_001_val_1.fastq \

i2 /gscratch/scrubbed/samwhite/illumina_geoduck_hiseq/20180328_trim_galore_illumina_hiseq_geoduck/Geoduck-NMP-gDNA-5to7kb-8_S31_L008_R2_001_val_2.fastq \

i1 /gscratch/scrubbed/samwhite/illumina_geoduck_hiseq/20180328_trim_galore_illumina_hiseq_geoduck/Geoduck-NMP-gDNA-6_S21_L006_R1_001_val_1.fastq \

i2 /gscratch/scrubbed/samwhite/illumina_geoduck_hiseq/20180328_trim_galore_illumina_hiseq_geoduck/Geoduck-NMP-gDNA-6_S21_L006_R2_001_val_2.fastq \

i1 /gscratch/scrubbed/samwhite/illumina_geoduck_hiseq/20180328_trim_galore_illumina_hiseq_geoduck/Geoduck-NMP-gDNA-7_S25_L007_R1_001_val_1.fastq \

i2 /gscratch/scrubbed/samwhite/illumina_geoduck_hiseq/20180328_trim_galore_illumina_hiseq_geoduck/Geoduck-NMP-gDNA-7_S25_L007_R2_001_val_2.fastq \

i1 /gscratch/scrubbed/samwhite/illumina_geoduck_hiseq/20180328_trim_galore_illumina_hiseq_geoduck/Geoduck-NMP-gDNA-8_S29_L008_R1_001_val_1.fastq \

i2 /gscratch/scrubbed/samwhite/illumina_geoduck_hiseq/20180328_trim_galore_illumina_hiseq_geoduck/Geoduck-NMP-gDNA-8_S29_L008_R2_001_val_2.fastq \

i1 /gscratch/scrubbed/samwhite/illumina_geoduck_hiseq/20180328_trim_galore_illumina_hiseq_geoduck/Geoduck-NMP-gDNA-8to10kb-1_S4_L001_R1_001_val_1.fastq \

i2 /gscratch/scrubbed/samwhite/illumina_geoduck_hiseq/20180328_trim_galore_illumina_hiseq_geoduck/Geoduck-NMP-gDNA-8to10kb-1_S4_L001_R2_001_val_2.fastq \

i1 /gscratch/scrubbed/samwhite/illumina_geoduck_hiseq/20180328_trim_galore_illumina_hiseq_geoduck/Geoduck-NMP-gDNA-8to10kb-2_S8_L002_R1_001_val_1.fastq \

i2 /gscratch/scrubbed/samwhite/illumina_geoduck_hiseq/20180328_trim_galore_illumina_hiseq_geoduck/Geoduck-NMP-gDNA-8to10kb-2_S8_L002_R2_001_val_2.fastq \

i1 /gscratch/scrubbed/samwhite/illumina_geoduck_hiseq/20180328_trim_galore_illumina_hiseq_geoduck/Geoduck-NMP-gDNA-8to10kb-3_S12_L003_R1_001_val_1.fastq \

i2 /gscratch/scrubbed/samwhite/illumina_geoduck_hiseq/20180328_trim_galore_illumina_hiseq_geoduck/Geoduck-NMP-gDNA-8to10kb-3_S12_L003_R2_001_val_2.fastq \

i1 /gscratch/scrubbed/samwhite/illumina_geoduck_hiseq/20180328_trim_galore_illumina_hiseq_geoduck/Geoduck-NMP-gDNA-8to10kb-4_S16_L004_R1_001_val_1.fastq \

i2 /gscratch/scrubbed/samwhite/illumina_geoduck_hiseq/20180328_trim_galore_illumina_hiseq_geoduck/Geoduck-NMP-gDNA-8to10kb-4_S16_L004_R2_001_val_2.fastq \

i1 /gscratch/scrubbed/samwhite/illumina_geoduck_hiseq/20180328_trim_galore_illumina_hiseq_geoduck/Geoduck-NMP-gDNA-8to10kb-5_S20_L005_R1_001_val_1.fastq \

i2 /gscratch/scrubbed/samwhite/illumina_geoduck_hiseq/20180328_trim_galore_illumina_hiseq_geoduck/Geoduck-NMP-gDNA-8to10kb-5_S20_L005_R2_001_val_2.fastq \

i1 /gscratch/scrubbed/samwhite/illumina_geoduck_hiseq/20180328_trim_galore_illumina_hiseq_geoduck/Geoduck-NMP-gDNA-8to10kb-6_S24_L006_R1_001_val_1.fastq \

i2 /gscratch/scrubbed/samwhite/illumina_geoduck_hiseq/20180328_trim_galore_illumina_hiseq_geoduck/Geoduck-NMP-gDNA-8to10kb-6_S24_L006_R2_001_val_2.fastq \

i1 /gscratch/scrubbed/samwhite/illumina_geoduck_hiseq/20180328_trim_galore_illumina_hiseq_geoduck/Geoduck-NMP-gDNA-8to10kb-7_S28_L007_R1_001_val_1.fastq \

i2 /gscratch/scrubbed/samwhite/illumina_geoduck_hiseq/20180328_trim_galore_illumina_hiseq_geoduck/Geoduck-NMP-gDNA-8to10kb-7_S28_L007_R2_001_val_2.fastq \

i1 /gscratch/scrubbed/samwhite/illumina_geoduck_hiseq/20180328_trim_galore_illumina_hiseq_geoduck/Geoduck-NMP-gDNA-8to10kb-8_S32_L008_R1_001_val_1.fastq \

i2 /gscratch/scrubbed/samwhite/illumina_geoduck_hiseq/20180328_trim_galore_illumina_hiseq_geoduck/Geoduck-NMP-gDNA-8to10kb-8_S32_L008_R2_001_val_2.fastq

Results:

Output folder: 20180405_sparseassembler_kmer101_geoduck/

Slurm output files:

SparseAssembler Assembly (FASTA): Contigs.txt

Added this to the GitHub wiki for our geoduck genome assemblies.